Unlocking the Secrets of R1-Zero: A Deep Dive into a Novel Training Approach

The world of machine learning is a whirlwind of innovation, with new techniques and architectures emerging at a dizzying pace. One area that's generating significant buzz is R1-Zero training, a method designed to improve the efficiency and performance of neural networks. But what exactly is R1-Zero, and why should you care? This post aims to demystify this intriguing approach, providing a practical guide and a critical perspective on its strengths and limitations.

What is R1-Zero Training? Breaking Down the Basics

At its core, R1-Zero training, as presented in this post with reference to the GitHub repository ( https://github.com/sail-sg/understand-r1-zero ), revolves around a specific regularization technique. Regularization is a crucial component of training neural networks: it helps prevent overfitting and improves generalization to unseen data. R1-Zero achieves this by adding a penalty term to the loss function, with the goal of promoting simpler, more robust models.

The key difference lies in how this penalty is applied. Unlike traditional L1 regularization (also known as Lasso), which penalizes the absolute values of the weights, R1-Zero as described here targets the gradients of the loss with respect to the weights. This shift has significant implications for how the network learns and adapts.

Think of it this way: Imagine you're trying to sculpt a statue. Standard L1 regularization is like having a chisel that removes material uniformly. R1-Zero, on the other hand, is like using a very precise laser cutter that targets specific areas of the statue, controlling the flow of material based on the gradient. This allows for a more nuanced and potentially more effective approach to regularization.
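To make the contrast concrete, the two penalties can be written side by side. The gradient-based form is an assumption based on the squared-gradient sum in this post's conceptual snippet, not a formula confirmed by the source repository. Here $L$ is the unregularized loss, $w_i$ are the weights, and $\lambda$ is the regularization strength.

$$
L_{\text{L1}}(w) = L(w) + \lambda \sum_i |w_i|,
\qquad
L_{\text{grad}}(w) = L(w) + \lambda \sum_i \left( \frac{\partial L}{\partial w_i} \right)^2
$$

The first penalty depends only on the weights themselves. The second depends on how sensitive the loss is to each weight, so minimizing it involves derivatives of the gradient, that is, second-order information.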

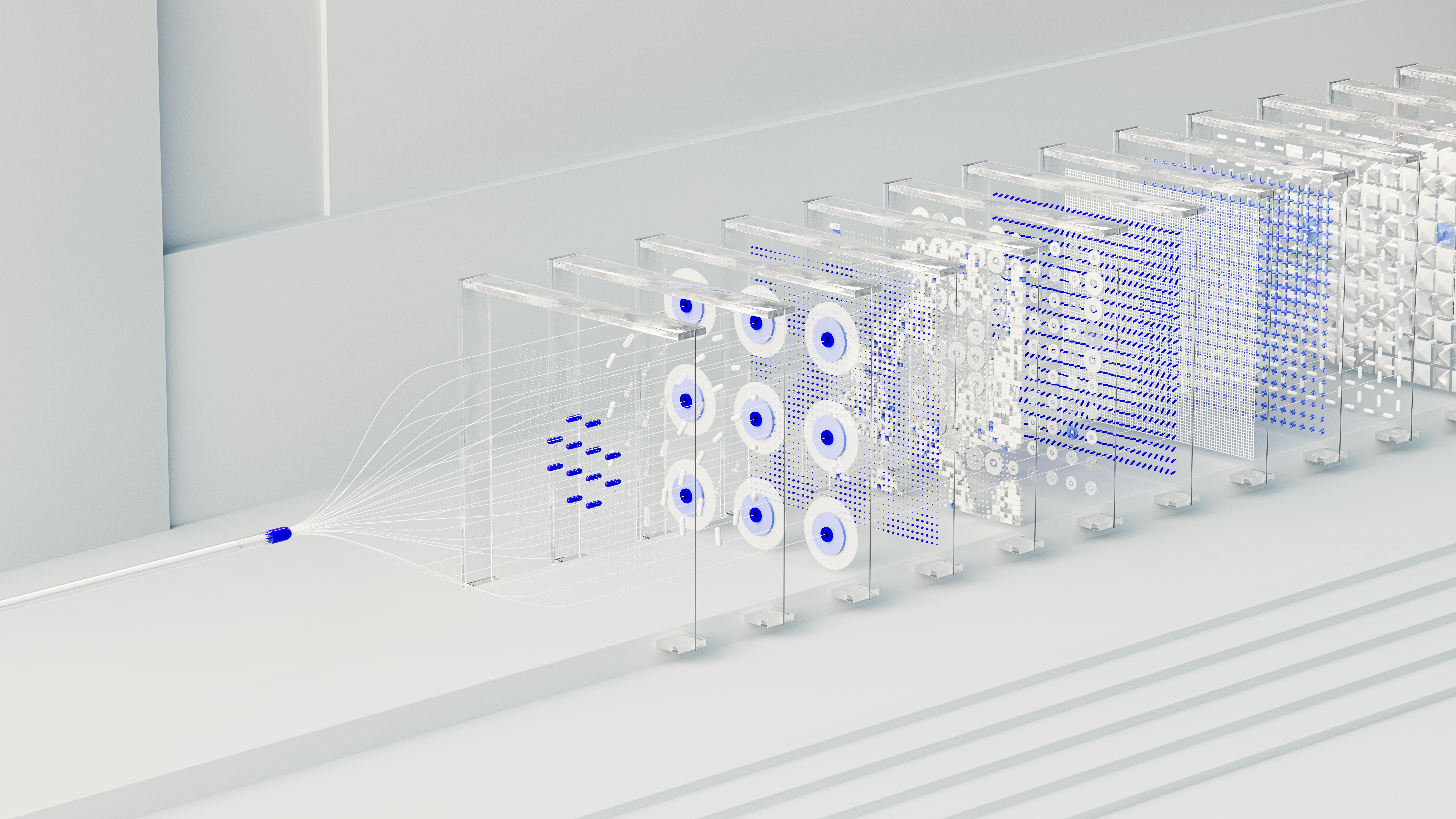

How R1-Zero Works: A Step-by-Step Explanation

Let's break down the process with a simplified, yet informative, guide:

- Forward Pass: The input data is fed through the network. Each layer applies its weights to its input and passes the result through an activation function.

- Loss Calculation: The network's predictions are compared to the ground truth labels, and a loss function (e.g., mean squared error, cross-entropy) is calculated. This loss represents the error the network needs to minimize.

- Gradient Calculation: Backpropagation is used to calculate the gradient of the loss with respect to each weight in the network. This gradient indicates how the loss would change in response to a small change in that weight.

- R1-Zero Penalty Application: This is the core of the technique. Instead of penalizing the weight magnitudes themselves, R1-Zero calculates a penalty from the gradient of the loss with respect to each weight. The exact formula is not reproduced here, but the core idea is to encourage smaller gradient values.

- Weight Update: The weights are updated using an optimization algorithm (e.g., stochastic gradient descent) with the added R1-Zero penalty. This means the weights are adjusted in a way that minimizes both the original loss and the penalty term.

- Iteration: The steps above are repeated for multiple training epochs until the network converges or a stopping criterion is met.
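The steps above can be sketched on a toy problem in plain Python. This is a minimal illustration under stated assumptions, not the method from the source repository: the model is a single weight with prediction `w * x`, the loss is mean squared error, and the penalty is assumed to be `lam * grad**2`. The names `loss_and_grad`, `train`, `lam`, and `curvature` are hypothetical.

```python
# Toy model: prediction = w * x, loss = mean squared error.
# Assumed penalty (not confirmed by the source): lam * (dL/dw)**2.


def loss_and_grad(w, xs, ys):
    """Return the MSE loss and its gradient dL/dw for prediction = w * x."""
    n = len(xs)
    loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / n
    grad = sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / n
    return loss, grad


def train(xs, ys, lam=0.01, lr=0.01, steps=200):
    """Gradient descent on loss + lam * grad**2 for the one-weight model."""
    w = 0.0
    # d(grad)/dw is constant for this model: mean(2 * x**2).
    curvature = sum(2 * x * x for x in xs) / len(xs)
    for _ in range(steps):
        # Forward pass, loss calculation, and gradient calculation.
        _, grad = loss_and_grad(w, xs, ys)
        # Penalty application: derivative of lam * grad**2 with respect to w.
        penalty_grad = 2 * lam * grad * curvature
        # Weight update with the combined gradient.
        w -= lr * (grad + penalty_grad)
    return w


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]  # generated by y = 2x
w = train(xs, ys)
print(round(w, 4))
```

For this one-weight model the penalty gradient is proportional to the loss gradient, so the penalty only rescales the step and the fitted weight still approaches 2. In a real network the second derivative is not constant and has to be obtained by differentiating through the gradient computation.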

Practical Implementation: Considerations and Code Snippets (Conceptual)

Implementing R1-Zero requires careful consideration of the penalty term and its integration into the optimization process. While the specific implementation details depend on the chosen deep learning framework (TensorFlow, PyTorch, etc.), here’s a TensorFlow sketch to illustrate the key idea (a simplified demonstration of the principle, not a reference implementation; `loss_fn` is a placeholder for any loss callable that takes labels and predictions):

```python
import tensorflow as tf


def r1_zero_penalty(gradients, lambda_param):
    # Calculate the R1-Zero penalty as the scaled sum of squared gradients.
    # (Simplified example; the actual implementation might vary.)
    squared_norms = [tf.reduce_sum(tf.square(g)) for g in gradients if g is not None]
    return lambda_param * tf.add_n(squared_norms)


def train_step(model, optimizer, loss_fn, inputs, labels, lambda_param):
    variables = model.trainable_variables
    # The inner tape yields the gradients the penalty is built from;
    # the outer tape differentiates the penalized loss.
    with tf.GradientTape() as outer_tape:
        with tf.GradientTape() as inner_tape:
            predictions = model(inputs, training=True)
            loss = loss_fn(labels, predictions)
        # Get the gradients of the unpenalized loss.
        gradients = inner_tape.gradient(loss, variables)
        # Apply the R1-Zero penalty.
        r1_penalty = r1_zero_penalty(gradients, lambda_param)
        total_loss = loss + r1_penalty

    # Differentiate the total loss so the penalty affects the update.
    total_gradients = outer_tape.gradient(total_loss, variables)
    optimizer.apply_gradients(zip(total_gradients, variables))
    return total_loss
```

In this snippet, the `r1_zero_penalty` function computes the penalty from the gradients. The `train_step` function adds this penalty to the original loss and then differentiates the total with the outer tape, so the penalty actually influences the weight update. The `lambda_param` is a hyperparameter controlling the strength of the regularization.

Analyzing the Advantages: What R1-Zero Brings to the Table

According to the research, R1-Zero offers several potential benefits:

- Improved Generalization: By promoting simpler models, R1-Zero can reduce overfitting and lead to better performance on unseen data.

- Enhanced Robustness: The regularization can make the network less sensitive to noisy or irrelevant features in the data.

- Potential for Feature Selection: Similar to L1 regularization, R1-Zero might encourage some weights to become zero, effectively performing feature selection.

- Efficiency: The computational cost depends on the implementation. Differentiating a penalty defined on gradients requires an additional backward pass, so the overhead relative to weight-based regularization techniques should be measured rather than assumed.

Critical Perspectives: Addressing the Limitations and Challenges

While R1-Zero holds promise, it's crucial to approach it with a critical eye. Here are some potential limitations and challenges:

- Hyperparameter Tuning: The strength of the R1-Zero penalty (the `lambda_param`) is a hyperparameter that needs to be carefully tuned. This can add complexity to the training process.

- Implementation Complexity: Implementing R1-Zero, especially in custom architectures, might require a deeper understanding of the underlying math and gradient calculations.

- Empirical Validation: The effectiveness of R1-Zero can vary depending on the specific dataset, architecture, and task. Thorough empirical validation is essential.

- Comparison to other Regularization Techniques: It's important to compare R1-Zero's performance against other established regularization methods like dropout, weight decay (L2 regularization), and batch normalization to determine its relative advantages.
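One way to approach the tuning and comparison points above is to score each setting on held-out data. The sketch below is hypothetical throughout: it uses a one-weight model with prediction `w * x`, assumes the penalty `lam * grad**2`, and uses made-up data. The names `fit`, `compare`, and the configuration labels are illustrative and not part of any library.

```python
def mse(w, data):
    """Mean squared error of prediction = w * x on (x, y) pairs."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def fit(train_data, lam=0.0, weight_decay=0.0, lr=0.01, steps=50):
    """Gradient descent with an optional gradient penalty and L2 weight decay."""
    n = len(train_data)
    curvature = sum(2 * x * x for x, _ in train_data) / n  # d(grad)/dw
    w = 0.0
    for _ in range(steps):
        grad = sum(2 * x * (w * x - y) for x, y in train_data) / n
        penalty_grad = 2 * lam * grad * curvature  # from lam * grad**2
        decay_grad = 2 * weight_decay * w          # from weight_decay * w**2
        w -= lr * (grad + penalty_grad + decay_grad)
    return w


def compare(train_data, val_data, configs):
    """Return {name: validation MSE} and the name with the lowest value."""
    scores = {
        name: mse(fit(train_data, **kwargs), val_data)
        for name, kwargs in configs.items()
    }
    return scores, min(scores, key=scores.get)


# Made-up data for illustration only.
train_data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]
val_data = [(1.5, 3.0), (2.5, 5.0)]
configs = {
    "baseline": {},
    "gradient_penalty_0.01": {"lam": 0.01},
    "gradient_penalty_0.1": {"lam": 0.1},
    "weight_decay_0.01": {"weight_decay": 0.01},
}
scores, best = compare(train_data, val_data, configs)
for name, score in scores.items():
    print(f"{name}: validation MSE = {score:.4f}")
print("best:", best)
```

On a toy problem like this the ranking says little about real workloads. The point is the procedure: every candidate, including the unregularized baseline and an established method such as weight decay, is trained under the same budget and judged on the same validation set.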

Case Studies and Examples: Where R1-Zero Shines

While specific case studies are not explicitly mentioned in the initial GitHub repository ( https://github.com/sail-sg/understand-r1-zero ), the success of R1-Zero will likely depend on its ability to enhance performance in specific tasks. Potential applications include:

- Image Classification: In scenarios where the dataset is limited or noisy, R1-Zero could help prevent overfitting and improve accuracy.

- Natural Language Processing (NLP): R1-Zero could be beneficial in tasks like text classification and sentiment analysis.

- Medical Image Analysis: Improved generalization is crucial in medical applications where data scarcity and noise are common.

The comments on Hacker News ( https://news.ycombinator.com/item?id=43445894 ) may offer additional insights into the practical applications and perceived benefits of this approach from a community perspective.

Conclusion: Embracing the Potential of R1-Zero

R1-Zero training represents an intriguing development in the field of regularization. While it’s not a silver bullet, its focus on a gradient-based penalty offers a novel approach to improving the performance and robustness of neural networks. By understanding the underlying principles, the implementation details, and the potential limitations, practitioners can evaluate this technique and decide whether it improves their models.

Key Takeaways:

- R1-Zero, as described in this post, is a regularization technique that penalizes the gradients of the loss with respect to the weights.

- It aims to improve generalization, robustness, and potentially feature selection.

- Implementation requires careful consideration of the penalty term and hyperparameter tuning.

- Thorough empirical validation is critical to assess its effectiveness.

- R1-Zero is a promising approach, but further research and experimentation are needed to fully realize its potential.

As the field of deep learning continues to evolve, exploring and evaluating innovative techniques like R1-Zero is essential for staying at the forefront of this rapidly changing landscape. Keep an eye on future research and publications to stay informed about its ongoing development and impact.

This post was published as part of my automated content series.
